At Haptik, we have developed Conversational AI solutions for a number of enterprises across sectors. Most of the bots we have built so far have custom use cases that require API integrations. In some of these use cases, the bot response depends entirely on the user input.

Some of the use-cases are as mentioned below:

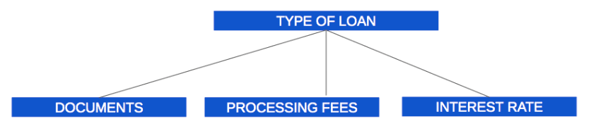

- 1. Documents required for loan type asked by the user.

- 2. List of all available franchisee location for a given pin code.

- 3. Create a ticket in client CRM when user post grievance.

- 4. Calculation of premium for the term or investment plans

- 5. Fetch the test report status

There are more such examples, but for now I'll discuss one use case: the user asks the bot for the status of a report.

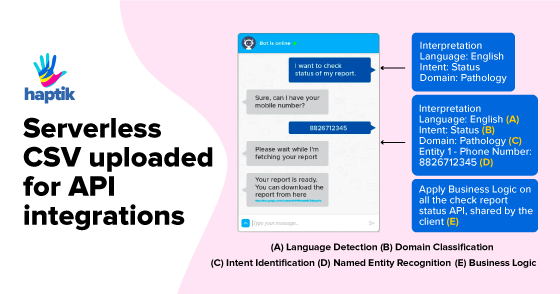

The diagram above shows where API integration happens. When our message pipeline receives a message, it goes through the following stages:

1. Language Detection

2. Domain Classification

3. Intent Detection

4. Named Entity Recognition

5. Deep Learning Layer

6. Business Logic

7. Multi-lingual support for ML models

8. Bot personality & tone adaptable to context

9. Data preprocessing

10. Returns Bot Response

As the diagram above shows, Named Entity Recognition extracts entities from the message. At pointer E in the diagram, we use these entities as parameters to call the client API, parse the API response, and return the bot response in the required format (Carousel, Quick Replies, Action Button Elements).
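
The step at pointer E can be sketched roughly as follows. This is a minimal illustration, not Haptik's pipeline code: the function name `build_report_status_reply`, the `report_id` entity, the shape of the API response, and the reply dictionary are all assumptions made for the example, and the client API is replaced by a local stand-in so the snippet runs without a network.

```python
from typing import Any, Callable, Dict


def build_report_status_reply(
    entities: Dict[str, str],
    call_client_api: Callable[[Dict[str, str]], Dict[str, Any]],
) -> Dict[str, Any]:
    """Turn NER entities into API params and the API result into a reply."""
    # Map the detected entities to the (hypothetical) client API parameters.
    params = {"report_id": entities["report_id"]}
    api_response = call_client_api(params)
    status = api_response.get("status", "unknown")
    # Shape the reply as a quick-replies element (illustrative structure only).
    return {
        "type": "quick_replies",
        "text": "Your report {} is {}.".format(params["report_id"], status),
        "options": ["Check another report", "Talk to an agent"],
    }


def fake_client_api(params: Dict[str, str]) -> Dict[str, Any]:
    # Stand-in for the real HTTP call to the client's API.
    return {"report_id": params["report_id"], "status": "ready"}


reply = build_report_status_reply({"report_id": "R-1024"}, fake_client_api)
print(reply["text"])  # Your report R-1024 is ready.
```

Passing the API call in as a function keeps the entity-to-parameter mapping and the response formatting testable on their own.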

Problem Statement

While working with many enterprise businesses, we noticed that some enterprises don't have APIs for use cases in which the data doesn't change frequently, for example franchise locations or the documents required for a loan type.

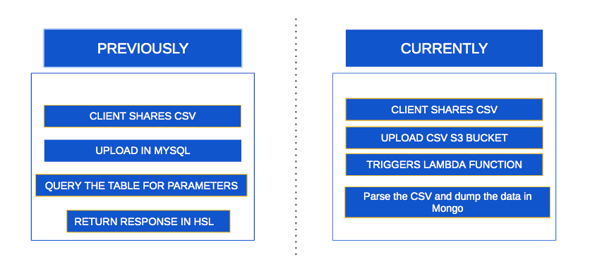

To solve this problem, we used to:

1. Ask the enterprise for a CSV dump of the data, which we uploaded into a MySQL table.

2. Query the table for the given parameters.

3. Return the response in the appropriate HSL format (Haptik Specific Language, which we use internally to create UI elements such as carousels, quick replies, and action buttons).

But with this approach, we faced the following problems:

1. Repetitive process for each enterprise: the data fields were different for every client, so we were maintaining separate SQL tables for each one.

2. Frequent code releases on addition or deletion of a data field: the schema had to change every time a data field was added or removed, and each change needed a new code release.

3. Not scalable: considering the number of clients being added, this was not a scalable way to handle the problem.
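
The earlier table-per-client workflow can be sketched as follows. This is only an illustration under stated assumptions: it uses Python's built-in `sqlite3` in place of MySQL so that it runs anywhere, and the table name, column names, and sample rows are made up for the example.

```python
import csv
import io
import sqlite3

# Hypothetical CSV dump received from one client.
CSV_DUMP = """pin_code,franchise_name,address
400001,Fort Outlet,12 Main Road
400001,Harbour Outlet,7 Dock Street
560001,MG Road Outlet,3 MG Road
"""

conn = sqlite3.connect(":memory:")
# One hand-written table per client: the columns are fixed in code.
conn.execute(
    "CREATE TABLE client_a_franchises "
    "(pin_code TEXT, franchise_name TEXT, address TEXT)"
)

rows = list(csv.DictReader(io.StringIO(CSV_DUMP)))
conn.executemany(
    "INSERT INTO client_a_franchises "
    "VALUES (:pin_code, :franchise_name, :address)",
    rows,
)


def franchises_for(pin_code):
    """Query the client's table for the given parameter."""
    cursor = conn.execute(
        "SELECT franchise_name FROM client_a_franchises "
        "WHERE pin_code = ? ORDER BY franchise_name",
        (pin_code,),
    )
    return [name for (name,) in cursor]


print(franchises_for("400001"))  # ['Fort Outlet', 'Harbour Outlet']
```

If the client later adds a column to the CSV, both the `CREATE TABLE` statement and the `INSERT` have to change, which is the kind of schema edit that forced a code release.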

Solution

We moved to a more skeleton-based approach, in which the values for the response and similar fields are generated dynamically from the bot builder.
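
One way to picture a skeleton-based response is a template whose placeholders are filled from a stored record at run time. The sketch below is an assumption about what such a skeleton could look like, not the actual bot builder format: the skeleton keys, the `$column` placeholder syntax (Python's `string.Template`), and the sample record are all hypothetical.

```python
from string import Template

# Hypothetical response skeleton, as a bot builder might configure it.
# "$name" placeholders refer to column names of the uploaded CSV.
CAROUSEL_ITEM_SKELETON = {
    "type": "carousel_item",
    "title": "$franchise_name",
    "subtitle": "$address, PIN $pin_code",
}


def render_skeleton(skeleton, record):
    """Fill every string value of the skeleton with values from one record."""
    return {
        key: Template(value).safe_substitute(record)
        for key, value in skeleton.items()
    }


record = {
    "franchise_name": "Fort Outlet",
    "address": "12 Main Road",
    "pin_code": "400001",
}
item = render_skeleton(CAROUSEL_ITEM_SKELETON, record)
print(item["subtitle"])  # 12 Main Road, PIN 400001
```

Because the skeleton is plain data, changing the wording or the fields shown in the response would not need a code change in this sketch.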

We decided to leverage AWS Lambda, S3, and MongoDB for this problem.

AWS Lambda is an event-driven, serverless computing platform provided by Amazon as part of Amazon Web Services. It is a computing service that runs code in response to events and automatically manages the computing resources required by that code.

1. Configure an S3 bucket to trigger a Lambda function every time someone uploads a CSV file.

2. The triggered Lambda function parses the CSV data fields, creates the schema, validates the data for each field, uploads the CSV, and dumps the data into MongoDB.

3. We also made use of Lambda Layers, which gave us access to additional packages such as Pandas.

4. Render an error or success response when the CSV upload completes, or send an email accordingly.
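
The "create the schema and validate each field" step can be sketched without hard-coding column names. The snippet below is a minimal illustration under stated assumptions: it uses the standard-library `csv` module instead of Pandas so that it is self-contained, and the function name `validate_csv`, the rule format, and the sample columns are hypothetical.

```python
import csv
import io


def validate_csv(csv_text, required_columns, max_lengths=None):
    """Parse CSV text and return (documents, errors).

    The column names come from the CSV header, so no per-client schema
    is hard-coded; only the validation rules are passed in.
    """
    max_lengths = max_lengths or {}
    reader = csv.DictReader(io.StringIO(csv_text))
    header = reader.fieldnames or []

    missing = [name for name in required_columns if name not in header]
    if missing:
        return [], ["missing columns: " + ", ".join(missing)]

    documents, errors = [], []
    for row_number, row in enumerate(reader, start=1):
        row_errors = []
        for name in required_columns:
            value = (row.get(name) or "").strip()
            if not value:
                row_errors.append(
                    "row {}: '{}' is empty".format(row_number, name))
            elif name in max_lengths and len(value) > max_lengths[name]:
                row_errors.append(
                    "row {}: '{}' is too long".format(row_number, name))
        if row_errors:
            errors.extend(row_errors)
        else:
            documents.append(dict(row))
    return documents, errors


SAMPLE = "pin_code,franchise_name\n400001,Fort Outlet\n,Harbour Outlet\n"
documents, errors = validate_csv(
    SAMPLE, ["pin_code", "franchise_name"], {"pin_code": 6})
print(documents)  # [{'pin_code': '400001', 'franchise_name': 'Fort Outlet'}]
print(errors)     # ["row 2: 'pin_code' is empty"]
```

The valid rows come back as plain dictionaries, which is the shape a document store such as MongoDB accepts, and the error list can be used for the success or failure email.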

Sample code for the lambda function

    import os
    import logging
    import json
    import datetime
    from io import StringIO

    import boto3
    import pandas as pd
    import requests
    from botocore.exceptions import ClientError
    from mongoengine import StringField, DateTimeField, Document, connect
    from pymongo import MongoClient

    SENDER = "parvez.alam@abc.com"
    AWS_REGION = "us-west-2"

    # Email recipients, fetched from the content store
    # (expected to be a list of addresses).
    RECIPIENT = requests.post(
        url=os.environ['CONTENT_STORE_URL'],
        json={
            "message_type": "client.csv_upload.email_recipients"
        }).json()

    # The subject line for the email.
    SUBJECT = "Client CSV Upload Status"

    s3 = boto3.resource('s3')

    # Set client
    client = MongoClient('mongodb://{host}:{port}/'.format(
        host=os.environ['DB_HOST'], port=os.environ['DB_PORT']))

    # Set database: use the same database that mongoengine connects to below,
    # so the cleanup in the handler targets the collection we insert into.
    db = client[os.environ['DB_NAME']]

    # Logger settings - CloudWatch
    logger = logging.getLogger()
    logger.setLevel(logging.DEBUG)

    # Default mongoengine connection used by the Document class.
    connect(os.environ['DB_NAME'], host='{host}:{port}'.format(
        host=os.environ['DB_HOST'], port=os.environ['DB_PORT']))

    class CSVData(Document):
        """Schema to store hdfc csv data."""

        field_1 = StringField(max_length=255, required=True)
        field_2 = StringField(max_length=255, required=True)
        field_3 = StringField(max_length=100, required=True)
        field_4 = StringField(required=True)
        field_5 = StringField(required=True)
        field_6 = StringField(required=True)
        field_7 = StringField(required=True)
        created_on = DateTimeField(default=datetime.datetime.now)

        meta = {
            'collection': 'csv_data',
            'strict': False,
            'auto_create_index': False
        }

    def send_email(body_text):
        """Send the upload status email through Amazon SES."""
        # The character encoding for the email.
        CHARSET = "UTF-8"

        # Create a new SES client and specify a region.
        ses_client = boto3.client('ses', region_name=AWS_REGION)

        # Try to send the email.
        try:
            # Provide the contents of the email.
            response = ses_client.send_email(
                Destination={
                    'ToAddresses': RECIPIENT,
                },
                Message={
                    'Body': {
                        'Text': {
                            'Charset': CHARSET,
                            'Data': body_text,
                        },
                    },
                    'Subject': {
                        'Charset': CHARSET,
                        'Data': SUBJECT,
                    },
                },
                Source=SENDER
            )
        # Display an error if something goes wrong.
        except ClientError as e:
            print(e.response['Error']['Message'])
        else:
            print("Email sent! Message ID:", response['MessageId'])

    ##################################################################
    ##------------- Lambda Handler ---------------------------------##
    ##################################################################

    def lambda_handler(event, context):
        """Event handler method which will get triggered
        when a new csv file gets uploaded for hdfc client

        Args:
            event (dict) -- Lambda event details
            context (obj) -- Context of environment in which this
                             lambda function is executing
        """
        response = {
            'status': False,
            'message': ''
        }

        logger.info("Received event: " + json.dumps(event, indent=2))
        logger.info("initializing the collection")
        logger.info("started")

        bucket = event['Records'][0]['s3']['bucket']['name']
        key = event['Records'][0]['s3']['object']['key']
        obj = s3.Object(bucket_name=bucket, key=key)

        try:
            # Read csv file as string
            csv_string = obj.get()['Body'].read().decode('utf-8')
        except Exception as e:
            logger.exception(str(e))
            response['message'] = str(e)
            send_email("Csv file is invalid")
            return response

        logger.info("Bucket {} key {}".format(bucket, key))

        # Convert string to a dataframe. Every column is read as text
        # because the schema stores all fields as strings.
        df_rows = pd.read_csv(StringIO(csv_string), dtype=str)

        if df_rows.empty:
            message = "uploaded csv is empty"
            logger.debug(message)
            send_email(message)
            # Stop here so the existing data is not wiped for an empty file.
            response['message'] = message
            return response

        logger.debug(df_rows.columns)
        column_order = ['List of Columns']  # placeholder for the expected columns
        csv_columns = list(df_rows.columns)
        logger.debug(csv_columns)

        # Before insertion remove the existing data from collection
        db.csv_data.delete_many({})

        for _, row in df_rows.iterrows():
            # Map the first seven CSV columns, by position, to the schema fields.
            data = CSVData(
                field_1=row.iloc[0],
                field_2=row.iloc[1],
                field_3=row.iloc[2],
                field_4=row.iloc[3],
                field_5=row.iloc[4],
                field_6=row.iloc[5],
                field_7=row.iloc[6]
            )
            data.save()

        # The email body for recipients with non-HTML email clients.
        message = "CSV Upload has been completed and data has been inserted successfully"
        send_email(message)

        response['status'] = True
        response['message'] = 'Data has been inserted successfully'
        return response
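
The sample handler reads only the first record of the event and uses the object key as received. S3 event notifications can carry several records, and the object key in them is URL-encoded (a space in a file name arrives as `+`). A small helper along the following lines could cover both cases; the function name `uploaded_objects` and the sample event are hypothetical, and the event contains only the fields this sketch reads.

```python
from urllib.parse import unquote_plus


def uploaded_objects(event):
    """Return (bucket, key) pairs for every record in an S3 event."""
    objects = []
    for record in event.get("Records", []):
        s3_info = record["s3"]
        bucket = s3_info["bucket"]["name"]
        # Keys are URL-encoded in the notification, so decode them first.
        key = unquote_plus(s3_info["object"]["key"])
        objects.append((bucket, key))
    return objects


# Trimmed-down sample event with only the fields used above.
sample_event = {
    "Records": [
        {
            "s3": {
                "bucket": {"name": "client-csv-uploads"},
                "object": {"key": "uploads/franchise+list.csv"},
            }
        }
    ]
}

print(uploaded_objects(sample_event))
# [('client-csv-uploads', 'uploads/franchise list.csv')]
```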

Advantages:

1. Lower cost.

2. A code release is required only when we need to change the MongoDB query.

3. Anyone in the company who has access can use the tool and configure the validation, so less developer intervention is required.

4. It makes life easier for bot builders.

Future Scope:

A UI tool so that anyone can configure the schema and the required validation for a CSV upload.

We hope this blog helps you understand how we improved the process of building custom bot solutions for clients and how we used serverless technology to achieve it. We welcome your comments and feedback and would love to hear from you. We are building more solutions like this, so follow us. We are hiring. Do visit our careers page.
