Elasticsearch has been around for a while now and is used as the search engine in a large number of applications. Depending on the use case, we have also seen it used as a replacement for traditional databases.

Elasticsearch makes searching key-value pairs across large amounts of data easy, and it performs really well. Here’s what Wikipedia has to say about Elasticsearch:

“Elasticsearch is a search engine based on Lucene. It provides a distributed, multitenant-capable full-text search engine with an HTTP web interface and schema-free JSON documents. It also forms a part of a popular log management stack called ELK.”

AWS Elasticsearch (Amazon Elasticsearch Service) is Elasticsearch plus Kibana provided as a managed service. AWS manages the nodes, and you get an endpoint through which you can access the Elasticsearch cluster.

What have we done for AWS Elasticsearch Auto-scaling at Haptik?

Using the AWS CLI command `get-metric-statistics`, we pull the data points of an AWS CloudWatch metric and calculate their average over the last 5 minutes. If that average is higher than a threshold we specify, we use the AWS ES CLI (`update-elasticsearch-domain-config`) to modify our Elasticsearch domain's cluster configuration and spin up new nodes to even out the load.
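The “last 5 minutes” window maps to the `--start-time` and `--end-time` options of `get-metric-statistics`. Below is a minimal sketch of computing that window, assuming GNU `date` (for `-d @<epoch>`); `metric_window` is a hypothetical helper name, not part of our scripts. Passing "now" explicitly makes the function deterministic and easy to test.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print "<start> <end>" covering the last N minutes,
# in the UTC ISO 8601 format that get-metric-statistics accepts.
#   $1  window length in minutes (5 in our scripts)
#   $2  optional "now" as Unix epoch seconds (defaults to the current time)
# Assumes GNU date, which understands "-d @<epoch>".
metric_window() {
  local minutes=$1
  local now=${2:-$(date -u +%s)}
  local fmt='+%Y-%m-%dT%H:%M:%SZ'
  local start=$(( now - minutes * 60 ))
  printf '%s %s\n' "$(date -u -d "@$start" "$fmt")" "$(date -u -d "@$now" "$fmt")"
}
```

In a script this could be used as `read -r date_start date_end < <(metric_window 5)` before calling `get-metric-statistics`.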

Let’s go through the scripts one by one. Afterwards, you can also go to our GitHub repo, download the scripts, modify them for your use case and put them to use.

Script 1

Explanation: Our web servers query ES (Elasticsearch) for data, and whenever traffic increases, our ES servers slow down under the load. When the ES servers slow down, our web servers' latency rises because each request takes longer. At this point, we want to bring up more nodes so that we can keep up with the load. Hence we scale our ES cluster based on the latency of the ELB that fronts our web servers.

The script below scales up the ES nodes based on the metric we chose for our use case: the ELB `Latency` metric with the Average statistic, which is a predefined CloudWatch metric.

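When this script runs, it fetches the metric data points for the last 5 minutes, takes their average and then decides whether the ES nodes need to be scaled up: if the average latency is 3 seconds or more, it adds 2 nodes to the domain's current instance count.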

```bash
#!/bin/bash
# Scale up an AWS Elasticsearch domain when the average ELB latency is too high.
# Usage: es_scaleup.sh <original_cluster_nodes_count>
set -euo pipefail

################## Change ELB name and AZ #####################
NAME="elb-name"
AZ="ap-south-1a"
################## Replace with your AWS ES domain name, not the URL ##################
DOMAIN="name"

original_cluster_nodes_count=${1:-}   # regular node count (e.g. 6); not used in the scale-up calculation

# Metric window: the last 5 minutes, in UTC ISO 8601
date_end=$(date -u +"%Y-%m-%dT%H:%M:%SZ")
date_start=$(date -u -d "5 minutes ago" +"%Y-%m-%dT%H:%M:%SZ")

################## ELB STEPS ##################
# Average ELB latency (seconds) per 1-minute period; --query keeps only the numbers
datapoints=$(/usr/local/bin/aws cloudwatch get-metric-statistics \
  --namespace AWS/ELB --metric-name Latency \
  --start-time "$date_start" --end-time "$date_end" \
  --period 60 --statistics Average --unit Seconds \
  --dimensions '[{"Name":"LoadBalancerName","Value":"'"$NAME"'"},{"Name":"AvailabilityZone","Value":"'"$AZ"'"}]' \
  --query 'Datapoints[].Average' --output text)

# Mean of the returned points * 100, truncated: a delay of 300 means 3 seconds, 500 means 5 seconds
delay=$(echo "$datapoints" | awk '{for (i = 1; i <= NF; i++) {s += $i; n++}} END {if (n > 0) printf "%d\n", s / n * 100}')

function scale_up () {
  if [ -n "$delay" ] && [ "$delay" -ge 300 ]; then
    count=2   # number of nodes added per scale-up
    instance_current_count=$(/usr/local/bin/aws es describe-elasticsearch-domain-config --domain-name "$DOMAIN" \
      --query 'DomainConfig.ElasticsearchClusterConfig.Options.InstanceCount' --output text)
    instance_new_auto_count=$((instance_current_count + count))
    /usr/local/bin/aws es update-elasticsearch-domain-config --domain-name "$DOMAIN" \
      --elasticsearch-cluster-config "InstanceType=m4.large.elasticsearch,InstanceCount=$instance_new_auto_count"
  fi
}

scale_up
```
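The averaging and threshold steps of the script above can be isolated into two small functions, which makes them easy to test without calling AWS. This is a sketch, not the code from our repo: `average_delay` and `should_scale_up` are hypothetical names, and it assumes the data points are fetched with `--query 'Datapoints[].Average' --output text`, which prints the averages as whitespace-separated numbers. It divides by the number of data points CloudWatch actually returned, since a window can come back with fewer than five.

```bash
#!/usr/bin/env bash
# Hypothetical helper: turn CloudWatch "Average" data points (in seconds) into
# the integer "delay" the scripts compare against: mean latency * 100, truncated.
# Reads whitespace-separated numbers from stdin (one line or many).
# Prints nothing when there are no data points.
average_delay() {
  awk '{ for (i = 1; i <= NF; i++) { sum += $i; n++ } }
       END { if (n > 0) printf "%d\n", sum / n * 100 }'
}

# Hypothetical helper: succeed (exit 0) when the delay meets the scale-up threshold.
#   $1  delay as printed by average_delay (may be empty)
#   $2  threshold, default 300 (== 3 seconds)
should_scale_up() {
  local delay=$1 threshold=${2:-300}
  [ -n "$delay" ] && [ "$delay" -ge "$threshold" ]
}
```

Usage: `delay=$(echo "$datapoints" | average_delay)`, then `if should_scale_up "$delay" 300; then ... fi`. Treating an empty result as "do nothing" avoids a shell error when CloudWatch returns no data for the window.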

Script 2

This script is for downscaling the AWS Elasticsearch nodes.

Explanation: To downscale nodes, we use a similar script that checks the value of the ELB metric over the past 5 minutes, takes the average and then decides whether or not to downscale. We scale down our nodes when the latency is at or below the threshold, i.e. delay <= 300, which corresponds to an average latency of 3 seconds (the script multiplies the average latency in seconds by 100). If this condition is true, it compares the current node count with the regular node count passed to it as a parameter and, if the cluster is larger, scales it back down to that regular count.

```bash
#!/bin/bash
# Scale an AWS Elasticsearch domain back to its regular size once ELB latency is low.
# Usage: es_scaledown.sh <original_cluster_nodes_count>
set -euo pipefail

##################### CHANGE ELB NAME AND AZ #########################
NAME="ELB-name"
AZ="ap-south-1a"
##################### Replace with your AWS ES domain name, not the URL #####################
DOMAIN="name"

original_cluster_nodes_count=${1:?usage: es_scaledown.sh <original_cluster_nodes_count>}

# Metric window: the last 5 minutes, in UTC ISO 8601
date_end=$(date -u +"%Y-%m-%dT%H:%M:%SZ")
date_start=$(date -u -d "5 minutes ago" +"%Y-%m-%dT%H:%M:%SZ")

##################### ELB STEPS #####################
datapoints=$(/usr/local/bin/aws cloudwatch get-metric-statistics \
  --namespace AWS/ELB --metric-name Latency \
  --start-time "$date_start" --end-time "$date_end" \
  --period 60 --statistics Average --unit Seconds \
  --dimensions '[{"Name":"LoadBalancerName","Value":"'"$NAME"'"},{"Name":"AvailabilityZone","Value":"'"$AZ"'"}]' \
  --query 'Datapoints[].Average' --output text)

# Mean latency * 100, truncated: a delay of 300 means 3 seconds, 500 means 5 seconds
delay=$(echo "$datapoints" | awk '{for (i = 1; i <= NF; i++) {s += $i; n++}} END {if (n > 0) printf "%d\n", s / n * 100}')

function scale_down () {
  if [ -n "$delay" ] && [ "$delay" -le 300 ]; then
    ############ check current size of cluster ###############
    instance_current_count=$(/usr/local/bin/aws es describe-elasticsearch-domain-config --domain-name "$DOMAIN" \
      --query 'DomainConfig.ElasticsearchClusterConfig.Options.InstanceCount' --output text)
    if [ "$instance_current_count" -eq "$original_cluster_nodes_count" ]; then
      echo "size okay"
    elif [ "$instance_current_count" -gt "$original_cluster_nodes_count" ]; then
      /usr/local/bin/aws es update-elasticsearch-domain-config --domain-name "$DOMAIN" \
        --elasticsearch-cluster-config "InstanceType=m4.large.elasticsearch,InstanceCount=$original_cluster_nodes_count"
      echo "Things look okay now. Cluster scaled down to $original_cluster_nodes_count"
    fi
  fi
}

scale_down
```
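The scale-down decision in the script above can also be written as a small pure function that is easy to test without touching AWS. A minimal sketch; `scale_down_target` is a hypothetical helper, not part of our repo. It takes the delay, the threshold (300, i.e. 3 seconds), the current node count and the regular node count passed on the command line, and prints the count to scale down to, if any.

```bash
#!/usr/bin/env bash
# Hypothetical helper mirroring the scale-down decision:
#   $1 delay      mean latency * 100 (300 == 3 seconds), may be empty
#   $2 threshold  scale down only when delay <= threshold
#   $3 current    node count reported by describe-elasticsearch-domain-config
#   $4 original   the regular node count passed to the script (e.g. 6)
# Prints the node count to scale down to, or nothing when no change is needed.
scale_down_target() {
  local delay=$1 threshold=$2 current=$3 original=$4
  # No data points, or latency still above the threshold: leave the cluster alone
  if [ -z "$delay" ] || [ "$delay" -gt "$threshold" ]; then
    return 0
  fi
  # Only shrink back to the regular size; never go below it
  if [ "$current" -gt "$original" ]; then
    echo "$original"
  fi
}
```

Usage: `target=$(scale_down_target "$delay" 300 "$instance_current_count" "$original_cluster_nodes_count")`, and call `update-elasticsearch-domain-config` only when `$target` is non-empty.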

Things you can change in the scripts:

- Name of the ELB, if you plan to use an ELB metric

- Name of the metric (it can even be a custom metric)

- Name of the ES domain

- Time window for checking the metric data points
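One way to make these settings changeable without editing the scripts is to read them from environment variables with defaults. This is a sketch with hypothetical variable names (`ELB_NAME`, `METRIC_NAMESPACE`, `METRIC_NAME`, `ES_DOMAIN`, `WINDOW_MINUTES`); the defaults are the values used in our scripts. Note that a custom metric will usually need its own `--dimensions` rather than the ELB ones.

```bash
#!/usr/bin/env bash
# Hypothetical configuration header: each setting listed above can be
# overridden from the environment; the defaults match our scripts.
ELB_NAME="${ELB_NAME:-elb-name}"                 # ELB whose metric is watched
AZ="${AZ:-ap-south-1a}"                          # AvailabilityZone dimension
METRIC_NAMESPACE="${METRIC_NAMESPACE:-AWS/ELB}"  # use your own namespace for a custom metric
METRIC_NAME="${METRIC_NAME:-Latency}"            # any CloudWatch metric, built-in or custom
ES_DOMAIN="${ES_DOMAIN:-name}"                   # AWS ES domain name, not the endpoint URL
WINDOW_MINUTES="${WINDOW_MINUTES:-5}"            # how far back to read metric data points
```

With this header, an override can be given per invocation, for example `ES_DOMAIN=my-domain bash es_scaleup.sh 6` (where `my-domain` is a placeholder).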

Now that we understand the two scripts, let’s see how to use them. This is how we run them:

1. We have put Script 1 (say es_scaleup.sh) in cron on our Jenkins server. You can put it in cron on any server that has the AWS CLI installed and the right permissions to access your AWS Elasticsearch domain. We run this script every 7 minutes because it takes around 5-6 minutes for the new nodes to come up:

`*/7 * * * * bash -x /var/lib/jenkins/scripts/elasticsearch/es_scaleup.sh 6`

- `-x`: runs the script in debug mode, printing each command as it executes. You may or may not use it.
- Parameter: “6” is passed as the minimum instance count. New nodes are added on top of the cluster's current count.

2. Similarly, we have put Script 2 (say es_scaledown.sh) in cron too. We run it every 30 minutes so that the node count is lowered only after things are totally stable:

`*/30 * * * * bash -x /var/lib/jenkins/scripts/elasticsearch/es_scaledown.sh 6`

- Parameter: “6” is passed to let this script know the minimum instance count, so that it does not scale down below that number.
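Since both cron entries pass the regular node count as the first argument, a script can reject a missing or malformed value before making any AWS calls. A minimal sketch; `validate_min_count` is a hypothetical helper, not part of our scripts.

```bash
#!/usr/bin/env bash
# Hypothetical guard for the node count passed from cron (the "6" above).
# Accepts only a positive decimal integer; otherwise prints usage and fails.
validate_min_count() {
  case "$1" in
    ''|*[!0-9]*) ;;                    # empty or non-numeric: fall through to the error
    *) [ "$1" -gt 0 ] && return 0 ;;   # positive integer: accept
  esac
  echo "usage: $0 <min_node_count> (a positive integer)" >&2
  return 1
}
```

At the top of each script this would be used as `validate_min_count "${1:-}" || exit 1`, so a broken crontab entry fails loudly instead of resizing the cluster to an unexpected count.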

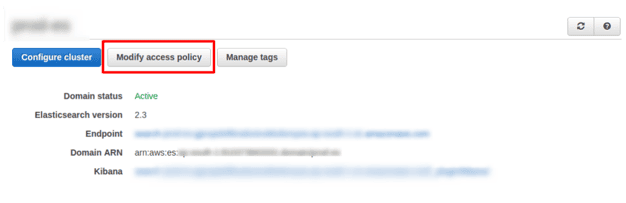

To modify ES via the CLI, we have to modify the access policy of the AWS Elasticsearch domain. It’s as simple as going to the ES console and clicking “Modify access policy”:

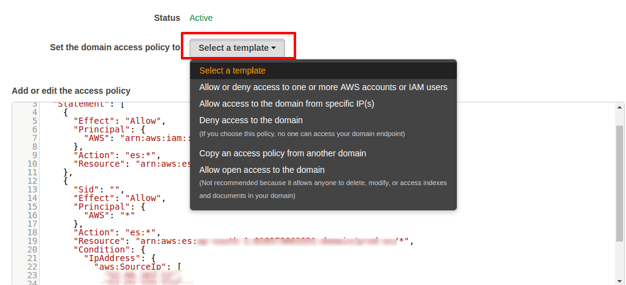

This opens a page where you can edit the domain's access policy. Read more about modifying access policies.

You’re all set. Just follow these steps if you want your AWS Elasticsearch domain to be auto-healing and auto-scaling. Let me know if you face any issues while writing the scripts or implementing this.

Looking forward to hearing from you all. We would love to hear how you are handling such use cases.

Also, don’t forget: we are hiring high-quality engineers. So if you are interested, reach out to us at hello@haptik.ai.