Haptik is a conversational AI company. We build Intelligent Virtual Assistant (IVA) solutions that enhance customer experience and drive ROI for large brands.

When we build these solutions for large brands, we integrate closely with their systems (via APIs) to source relevant information and provide contextual data to a customer interacting with the brand’s IVA (e.g. checking available loyalty points, tracking order status, canceling a prior order).

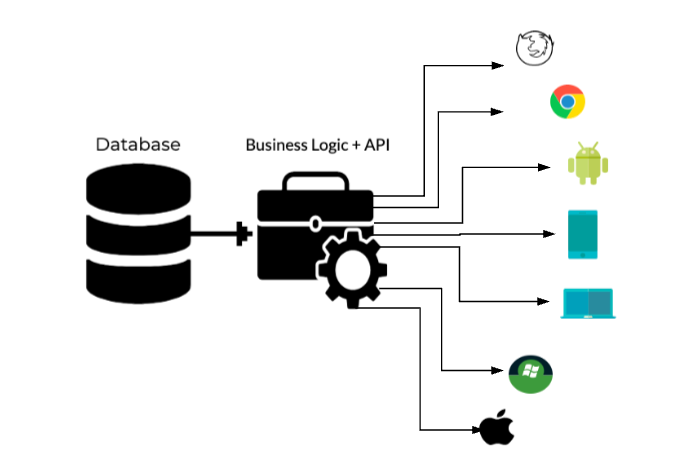

An API is a software intermediary that allows two applications to talk to each other. In other words, it is the messenger that delivers your request to the provider you’re requesting it from and then delivers the response back to you. Since we rely on our clients’ systems to relay information back and forth, it is very important for those systems to be consistent and reliable and to respect the agreed contract for message delivery.

Delivering a high-quality solution is our goal, and we take many measures to ensure our IVAs run systematically and perform well. But no solution is completely problem-free, and we’ve had our share of challenges while integrating with client APIs.

First Problem Statement

We have a support team tasked with triaging any problem our customers face while interacting with the IVA. Quite a few times, after analyzing an issue reported by a customer, we found that our IVA couldn’t answer the customer’s query because a client API had failed:

- The client API was down

- The client API contract had changed

- The client API didn’t return results within the expected response time

Because our IVA depends on external systems, we wanted to mitigate this risk so that none of our customers experience a degraded product. We decided to build a system that periodically performs proactive quality checks to validate the correctness of client APIs. If an API is not working as per the defined SLA, we notify the client so that corrective action can be taken before a customer runs into a problem.
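The three failure modes above suggest what each proactive check has to decide. The Java class below is a minimal, hypothetical sketch of that decision, not our production code: the class and enum names are invented, a set of required response fields stands in for the API contract, and a single latency limit stands in for the SLA. It only classifies a response that has already been observed; making the HTTP call and notifying the client are left out.

```java
import java.util.Map;
import java.util.Set;

// Hypothetical sketch of one proactive check against a client API.
public final class SlaCheck {

    // One outcome per failure mode (down, contract changed, too slow), plus HEALTHY.
    public enum Result { HEALTHY, DOWN, CONTRACT_CHANGED, TOO_SLOW }

    private final Set<String> requiredFields; // fields the agreed contract promises (assumption)
    private final long maxLatencyMillis;      // response-time limit taken from the SLA (assumption)

    public SlaCheck(Set<String> requiredFields, long maxLatencyMillis) {
        this.requiredFields = Set.copyOf(requiredFields);
        this.maxLatencyMillis = maxLatencyMillis;
    }

    // Classifies one observed response. A negative statusCode means no response
    // arrived at all (connection refused, DNS failure, timeout and so on).
    public Result classify(int statusCode, Map<String, Object> body, long latencyMillis) {
        if (statusCode < 0 || statusCode >= 500) {
            return Result.DOWN;
        }
        if (statusCode != 200 || body == null || !body.keySet().containsAll(requiredFields)) {
            return Result.CONTRACT_CHANGED;
        }
        if (latencyMillis > maxLatencyMillis) {
            return Result.TOO_SLOW;
        }
        return Result.HEALTHY;
    }
}
```

A scheduled job would run a check like this for each client API and notify the client whenever the result is anything other than HEALTHY. Treating every non-200, non-5xx status as a contract problem is a deliberate simplification; a real check would probably distinguish, for example, an expired token (401) from a moved endpoint (404).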

Second Problem Statement

A few of the channels we deploy our bots on (e.g. WhatsApp and Facebook Messenger) can only be tested end-to-end via an API.

Considering the above problems, we decided to focus our efforts on API testing.

What is API testing?

API testing is in many respects like testing software at the user-interface level, except that instead of using standard user inputs and outputs, you use software to send calls to the API, get the output, and verify the system’s response.
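To make the idea concrete without any test framework, here is a minimal sketch using only the JDK (Java 11+). It starts a local stub server as a stand-in for a real API (the /users/1 path and the JSON body are invented for illustration), sends a call with java.net.http.HttpClient, and verifies the status code and response body.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

public final class MinimalApiTest {
    private MinimalApiTest() { }

    // Starts a local stub that stands in for a real API: GET /users/1 returns fixed JSON.
    static HttpServer startStub() throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0); // port 0 = any free port
        server.createContext("/users/1", exchange -> {
            byte[] body = "{\"name\":\"Asha\",\"country\":\"India\"}".getBytes(StandardCharsets.UTF_8);
            exchange.getResponseHeaders().add("Content-Type", "application/json");
            exchange.sendResponseHeaders(200, body.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(body);
            }
        });
        server.start();
        return server;
    }

    // The API test itself: send a call, get the output, verify the response.
    static boolean checkUser(String baseUrl) throws IOException, InterruptedException {
        HttpClient client = HttpClient.newBuilder().version(HttpClient.Version.HTTP_1_1).build();
        HttpRequest request = HttpRequest.newBuilder(URI.create(baseUrl + "/users/1")).GET().build();
        HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
        // Plain string checks keep the sketch dependency-free; a real test would parse the JSON.
        return response.statusCode() == 200
                && response.body().contains("\"name\":\"Asha\"")
                && response.body().contains("\"country\":\"India\"");
    }

    // Runs the check against a freshly started stub and always shuts the stub down.
    static boolean runAgainstStub() {
        HttpServer server = null;
        try {
            server = startStub();
            return checkUser("http://127.0.0.1:" + server.getAddress().getPort());
        } catch (IOException e) {
            return false;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        } finally {
            if (server != null) {
                server.stop(0);
            }
        }
    }
}
```

Most of this code is plumbing (building requests, reading bodies, comparing values); a dedicated API-testing framework replaces it with declarative steps.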

Pros:

- Time-efficient: API testing is less time-consuming than functional GUI testing. It requires less code, so it provides faster test coverage than automated GUI tests.

- Interface-independent: API testing gives access to the application without a user interface, so we can test the application’s core logic directly, which helps in building a more robust application.

- Language-independent: API test cases exchange data as XML or JSON, which are language-independent formats.

- Cost-effective: API testing provides faster test results and better test coverage, which reduces overall testing cost.

We evaluated multiple tools for our testing and settled on Karate.

Why Karate?

- Karate is an open-source tool that combines API test automation, mocks and performance testing in a single, unified framework (its maintainers describe it as the only open-source tool that does so).

- It is built on Cucumber, a BDD framework, so tests are written in plain, English-like language that anybody can easily understand.

- Little to no coding knowledge is required to write tests in Karate.

- Tests are easy to maintain.

- It is flexible: you can write custom code in Java or JavaScript and call those functions/methods from your feature files.
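As an illustration of that flexibility, here is a small, hypothetical Java helper; the class and method names are ours, not part of Karate. Compiled onto the test classpath, it could be loaded in a feature file with a step such as `* def CountryChecks = Java.type('CountryChecks')` (the same `Java.type` mechanism our karate-config.js uses for KeyStorage) and then called from a match or assert step, for example to check a country code returned by an API.

```java
import java.util.Arrays;
import java.util.Locale;

// Hypothetical helper meant to be called from a Karate feature file via Java.type(...).
// The class and method are public so that Karate's JavaScript engine can reach them.
public final class CountryChecks {
    private CountryChecks() { }

    // True if value is an ISO 3166 alpha-2 country code known to the JDK, such as "IN" or "US".
    public static boolean isIsoCountryCode(String value) {
        if (value == null || value.length() != 2) {
            return false;
        }
        String upper = value.toUpperCase(Locale.ROOT);
        return Arrays.asList(Locale.getISOCountries()).contains(upper);
    }
}
```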

Sample test:

In the test case below, we get the user details from an API and validate the user’s name and country:

Java Runner File:

Feature File with test cases:

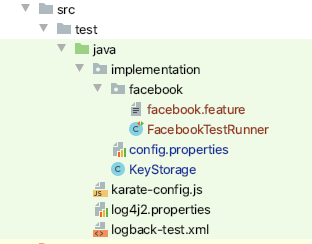

Folder Structure

We created a folder per client, each containing a runner file (a Java class that runs the tests) and a feature file with the test cases for that client.

Ensuring the security of client information:

We use tokens as a handshake mechanism to ensure proper authorization with a client’s system. We work with clients to get environment-specific tokens, so that the tokens we use to test client APIs in a pre-production environment are separate from those used with the client API in production, which reduces risk.
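A minimal sketch of the idea, with loudly hypothetical names: each environment maps to its own token key, an unknown environment is rejected rather than falling back to another environment’s token, and the token is attached to the request as a header. The key names and the Bearer scheme are assumptions for illustration only; each client API defines its own authentication headers (the config.properties example in this post, for instance, stores both a GUID and an auth token).

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.util.Map;

// Hypothetical sketch: each environment has its own token, and requests are built
// only with the token of the environment the tests are running in.
public final class AuthorizedRequests {
    private AuthorizedRequests() { }

    // Illustrative key names; real ones are agreed with each client.
    private static final Map<String, String> TOKEN_KEY_BY_ENV = Map.of(
            "staging", "CLIENT_API_TOKEN_STAGING",
            "production", "CLIENT_API_TOKEN_PRODUCTION");

    // Fails fast for an unknown environment instead of falling back to another
    // environment's token.
    public static String tokenKeyFor(String environment) {
        String key = environment == null ? null : TOKEN_KEY_BY_ENV.get(environment);
        if (key == null) {
            throw new IllegalArgumentException("No token configured for environment: " + environment);
        }
        return key;
    }

    // Attaches the token as a bearer credential; the header scheme is an assumption.
    public static HttpRequest authorizedGet(String url, String token) {
        return HttpRequest.newBuilder(URI.create(url))
                .header("Authorization", "Bearer " + token)
                .GET()
                .build();
    }
}
```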

We take security very seriously in our development process, and it was critical to control access to these tokens within our framework when using it to test a client API. We created a KeyStorage.java class to manage API auth keys and any other secret tokens. In dev/staging, the keys come from a local config.properties file; in production, we use the Environment Keys management tool to manage the tokens securely (the framework reads them as environment variables), so there is no unauthorized access to or exposure of any secrets. Depending on the environment in which you run the tests, the framework uses the relevant keys.

KeyStorage.java:

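```java
package implementation;

import java.io.FileInputStream;
import java.io.InputStream;
import java.util.Properties;

public class KeyStorage {
    private static final String FILE_NAME = "config.properties";
    private static Properties prop;
    private static KeyStorage single_instance = null;
    private static String currentEnvironment;

    private KeyStorage() { }

    // Static factory for the singleton; synchronized so that parallel test
    // threads cannot create two instances.
    public static synchronized KeyStorage getInstance(String environment) {
        if (single_instance == null) {
            single_instance = new KeyStorage();
            currentEnvironment = environment;
            // Outside production, secrets are read from the local config.properties file.
            if (!"production".equalsIgnoreCase(currentEnvironment)) {
                prop = new Properties();
                // try-with-resources closes the file even if load() fails
                try (InputStream input = new FileInputStream(FILE_NAME)) {
                    prop.load(input);
                } catch (Exception e) {
                    e.printStackTrace();
                    System.out.println("Exception occurred while reading from properties file");
                }
            }
        }
        return single_instance;
    }

    // Returns the secret for the given key: from config.properties in dev/staging,
    // from an environment variable in production.
    public String getValue(String key) {
        if (!"production".equalsIgnoreCase(currentEnvironment)) {
            return prop.getProperty(key);
        } else {
            return System.getenv(key);
        }
    }
}
```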

config.properties:

```properties
FACEBOOK_USER_API_GUID=sdsdjuhcksidsdsdsduhdjsASDSD
FACEBOOK_USER_API_AUTHTOKEN=842djhsgsdg82u=
```

karate-config.js:

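```javascript
// Exposes KeyStorage to feature files: config.getKeyValue('KEY_NAME') returns the
// secret for the current environment.
config.getKeyValue = function(keyName) {
  var KeyStorage = Java.type('implementation.KeyStorage');
  var ks = KeyStorage.getInstance(env);
  return ks.getValue(keyName);
};
```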

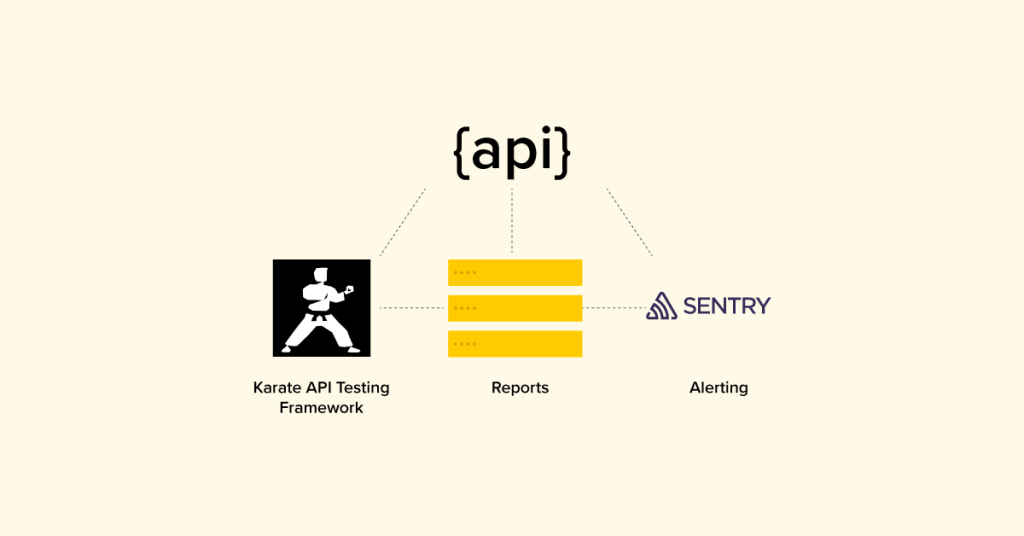

Reporting:

Cucumber has a concept of ‘hooks’, and Karate supports it to some extent.

Hooks are blocks of code that run before or after each scenario. In Cucumber, you can define them anywhere in your project or step-definition layers using the @Before and @After annotations. Hooks let us manage the code workflow better and reduce code redundancy.

To track API failures, we used Karate’s afterScenario hook, which executes after every test scenario and checks whether karate.info.errorMessage is null. A non-null errorMessage means the test case failed.
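The contract that hook relies on, namely that it runs after every scenario and that a null errorMessage means the scenario passed, can be modelled in a few lines of plain Java. This is a toy runner written for illustration (AfterScenarioHookDemo and its methods are hypothetical), not how Karate implements hooks internally.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.BiConsumer;

// Toy scenario runner, written for illustration only (not Karate's implementation).
public final class AfterScenarioHookDemo {
    private AfterScenarioHookDemo() { }

    // Runs each scenario in order, then calls the hook with the scenario name and an
    // error message that is null on success and non-null on failure, like karate.info.
    public static void runAll(Map<String, Runnable> scenarios, BiConsumer<String, String> afterScenario) {
        for (Map.Entry<String, Runnable> scenario : scenarios.entrySet()) {
            String errorMessage = null;
            try {
                scenario.getValue().run();
            } catch (AssertionError | RuntimeException e) {
                errorMessage = String.valueOf(e.getMessage());
            }
            afterScenario.accept(scenario.getKey(), errorMessage);
        }
    }

    // One passing and one failing scenario, with a hook that records pass/fail
    // messages similar to those built by the JavaScript hook in this post.
    public static List<String> demo() {
        Map<String, Runnable> scenarios = new LinkedHashMap<>();
        scenarios.put("get user details", () -> { });
        scenarios.put("get order status", () -> {
            throw new AssertionError("status code was: 500");
        });
        List<String> log = new ArrayList<>();
        runAll(scenarios, (name, error) -> log.add(error != null
                ? "'" + name + "' failed. Error Message: " + error
                : "'" + name + "' passed"));
        return log;
    }
}
```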

At Haptik, we use Sentry for application monitoring and error tracking. If any test case fails in production, we log the error message in Sentry and alert the relevant teams.

```javascript
// Used as Karate's afterScenario hook: runs after every test scenario.
var afterScenarioHook = function() {
  // the JSON returned from 'karate.info' has the following properties:
  // - featureDir
  // - featureFileName
  // - scenarioName
  // - scenarioType (either 'Scenario' or 'Scenario Outline')
  // - scenarioDescription
  // - errorMessage (will be not-null if the Scenario failed)
  var info = karate.info;
  var errorMessage = info.errorMessage;
  var scenarioName = info.scenarioName;
  var featureFileName = info.featureFileName;
  var msg;
  if (errorMessage != null) {
    msg = "'" + scenarioName + "' in '" + featureFileName + "' failed. Error Message: " + errorMessage;
    karate.log(msg);
    // Report the failure; SentryLogger only sends events to Sentry in production
    var SentryLogger = Java.type('implementation.SentryLogger');
    var logger = SentryLogger.getInstance(env);
    logger.sendEvent(msg);
  } else {
    msg = "'" + scenarioName + "' in '" + featureFileName + "' passed";
    karate.log(msg);
  }
};
```

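```java
package implementation;

import io.sentry.Sentry;

public class SentryLogger {
    private static SentryLogger single_instance = null;
    private static String currentEnvironment;

    private SentryLogger() { }

    // Static factory for the singleton. In production it initialises the Sentry client
    // with the DSN stored under SENTRY_DSN and tags every event with the environment.
    // Sentry.init(dsn), Sentry.getContext() and Sentry.capture(...) are from the
    // legacy 1.x sentry-java client.
    public static synchronized SentryLogger getInstance(String environment) {
        if (single_instance == null) {
            single_instance = new SentryLogger();
            currentEnvironment = environment;
            if ("production".equalsIgnoreCase(currentEnvironment)) {
                KeyStorage ks = KeyStorage.getInstance(environment);
                Sentry.init(ks.getValue("SENTRY_DSN"));
                Sentry.getContext().addTag("environment", environment);
            }
        }
        return single_instance;
    }

    // Sends the message to Sentry in production; elsewhere it only prints it.
    public void sendEvent(String message) {
        if ("production".equalsIgnoreCase(currentEnvironment)) {
            Sentry.capture(message);
        } else {
            System.out.println("[SENTRY] " + message);
        }
    }
}
```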

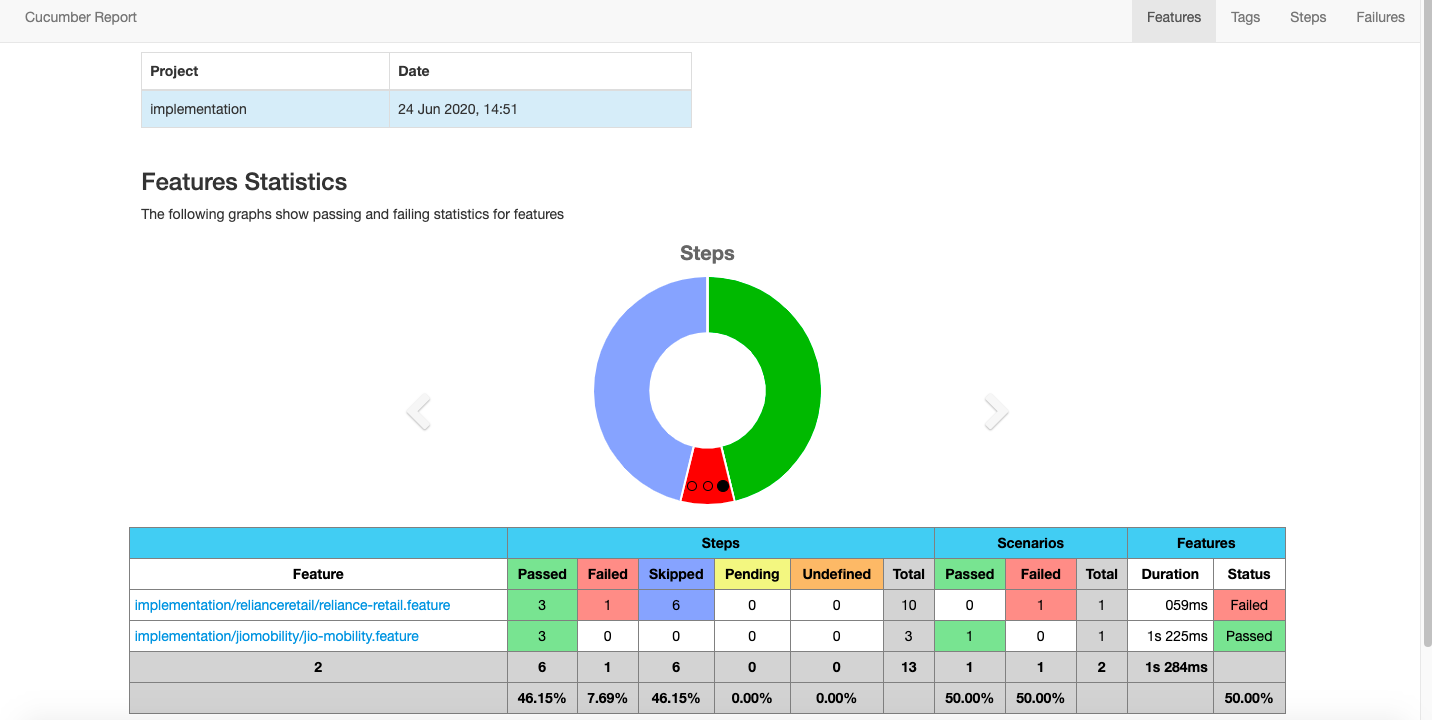

For dev/staging, we generate an HTML report instead. It lists the tests run from each feature file, their completion status, the time taken to complete them, and so on.

Running the tests

Manually via the command line

Execute the Karate tests for a specific runner file:

```shell
mvn clean test -Dtest=<name of the runner file>
```

The karate.options property lets you run a specific feature file, or all the feature files contained in a folder:

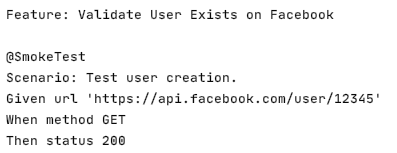

Tags let users run particular tests, or skip some tests, in a feature file. You can specify a tag name in the runner file (or on the command line) and Karate will execute only the scenarios that have that tag in the feature file and skip the rest.

```shell
mvn test -Dkarate.options="--tags @SmokeTest classpath:src/implementation/facebook/" -Dtest=FacebookTestRunner
```

Feature file:

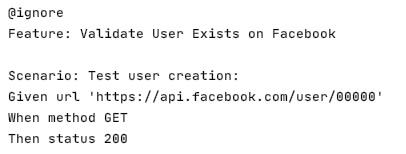

The karate.options property can also skip a specific feature file: add @ignore (or any other tag name) at the top of the feature file and pass `~@<tag-name>` in the command:

```shell
mvn test -Dkarate.options="--tags ~@ignore classpath:src/implementation/facebook/" -Dtest=FacebookTestRunner
```
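The tag behaviour described in this section boils down to a simple rule per scenario. The hypothetical TagFilter class below models only the two forms used in these commands, a plain @tag (run only matching scenarios) and ~@tag (skip matching scenarios); Karate’s actual --tags option supports richer expressions than this sketch.

```java
import java.util.Set;

// Simplified model of the two tag forms used in the commands above:
//   "@tag"  -> run only scenarios that carry the tag
//   "~@tag" -> run every scenario except those that carry the tag
public final class TagFilter {
    private TagFilter() { }

    // scenarioTags should include tags written on the Feature line, because scenarios
    // inherit them; that is how @ignore at the top of a feature file skips all of it.
    public static boolean shouldRun(Set<String> scenarioTags, String expression) {
        if (expression.startsWith("~")) {
            return !scenarioTags.contains(expression.substring(1));
        }
        return scenarioTags.contains(expression);
    }
}
```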

Users can also specify the environment on which the tests are running and write custom code for each environment in the karate-config.js file.

```shell
mvn test -Dkarate.env=<name of the environment> -Dtest=<name of the runner file>
```

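```javascript
// karate-config.js must contain a single function that returns the config object.
function fn() {
  // The ENVIRONMENT variable takes precedence over the karate.env system property
  var env = java.lang.System.getenv('ENVIRONMENT');
  if (!env) {
    env = karate.env; // get system property 'karate.env'
    if (!env) {
      env = 'dev';
    }
  }
  karate.log('karate.env system property was:', env);
  var config = {
    env: env,
    myVarName: 'someValue'
  };
  // Environment-specific settings, and config.getKeyValue from the snippet above, go here
  return config;
}
```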
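The precedence in the karate-config.js snippet above (the ENVIRONMENT variable, then the karate.env system property, then a default of dev) can also be expressed as a small Java helper, which is handy if the same decision has to be made outside Karate, for example in a custom runner. EnvResolver is a hypothetical name; the logic mirrors the JavaScript.

```java
import java.util.Map;

// Java version of the environment lookup in karate-config.js (hypothetical helper).
public final class EnvResolver {
    private EnvResolver() { }

    // Precedence: ENVIRONMENT variable, then the karate.env system property, then "dev".
    public static String resolve(Map<String, String> environmentVariables, String karateEnvProperty) {
        String env = environmentVariables.get("ENVIRONMENT");
        if (isMissing(env)) {
            env = karateEnvProperty;
        }
        if (isMissing(env)) {
            env = "dev";
        }
        return env;
    }

    // Matches JavaScript's !env check for strings: null/undefined and "" both count as missing.
    private static boolean isMissing(String value) {
        return value == null || value.isEmpty();
    }

    // Resolves against the running JVM, e.g. after mvn test -Dkarate.env=staging
    public static String resolveFromProcess() {
        return resolve(System.getenv(), System.getProperty("karate.env"));
    }
}
```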

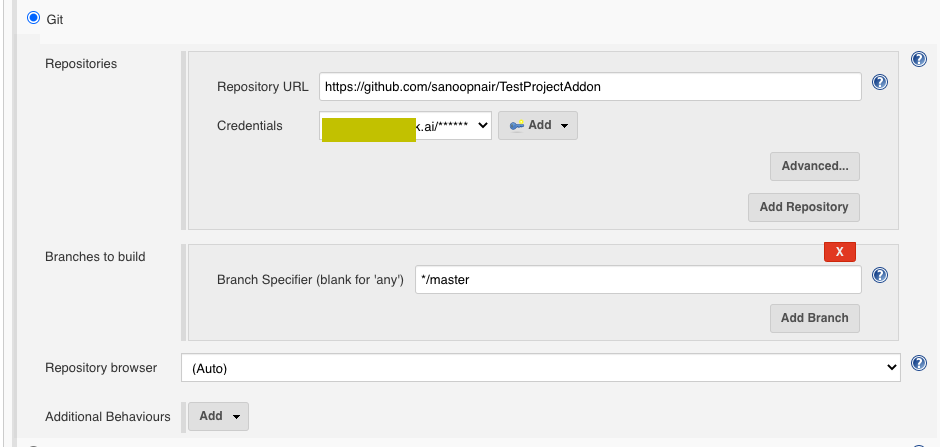

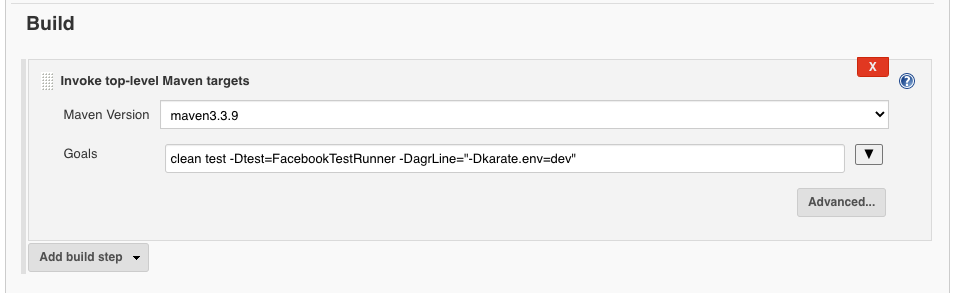

All the above is automated via Jenkins.

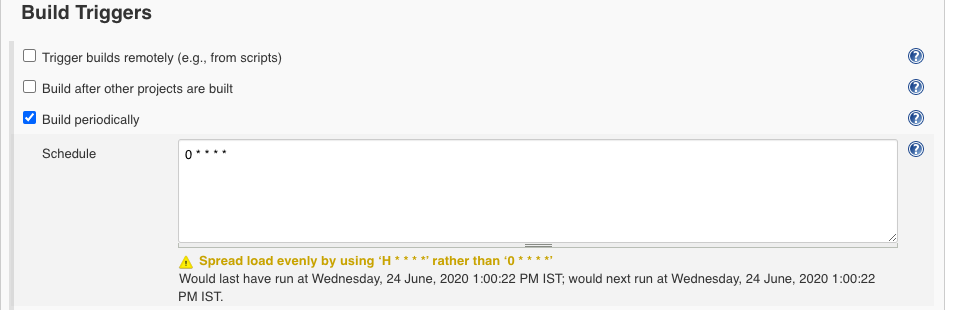

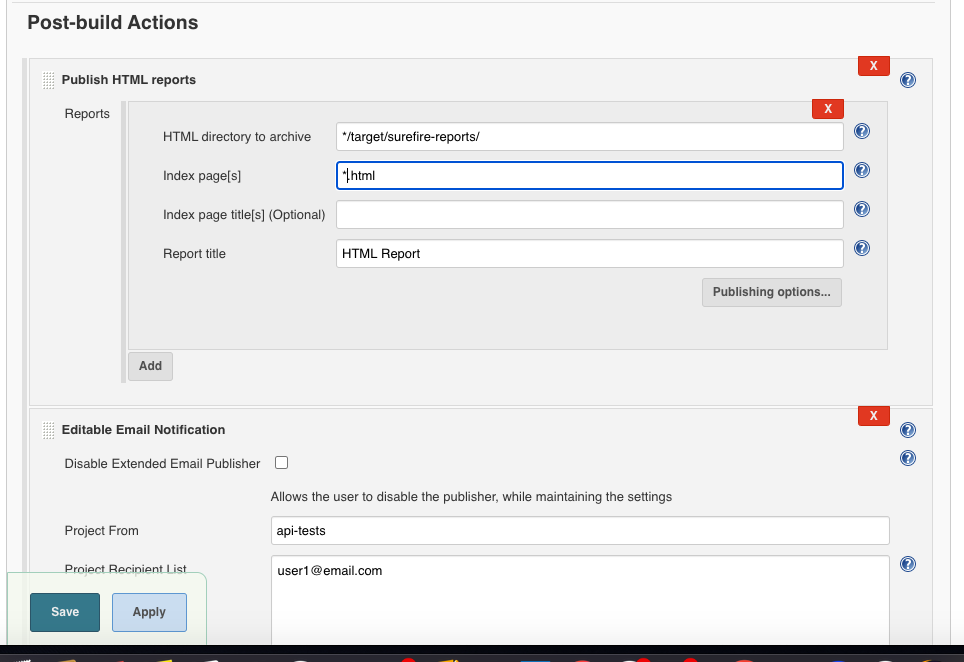

We can also schedule the tests to run periodically using a CI/CD tool. In our case, we used Jenkins to run the tests every hour. The Jenkins job downloads the latest version of the code from Git, runs the tests, and emails the report to the concerned team. The job is set up in these steps:

- Fetching the latest code from the repository

- Triggering the runs every hour

- Running the tests using a Maven command

- As part of post-execution, attaching the test report and sending it via email

Conclusion:

At Haptik, teams were not using any specific tool for API testing: a few APIs were tested using custom tools, some using Postman, and some were not tested at all. Karate makes API testing simple, and its BDD-style syntax makes tests easier to read and understand. Our plan is to use Karate for all API testing within engineering. Along with production regression testing, we also plan to integrate it into our PR merge process via Jenkins.

This blog was co-authored by @saumil-shah
