The more you interact with Generative AI tools like ChatGPT, the clearer it becomes that these tools can wear multiple hats. They can step into the shoes of a customer service rep and craft an empathetic response to pacify a frustrated customer; they can be your coding ninja who helps debug your code and prepare it for production; they can be your writing assistant, turning a single input into engaging copy. They can play many other roles too, if you give them inputs that bring out their versatility. The practice of crafting those inputs is called prompt engineering.

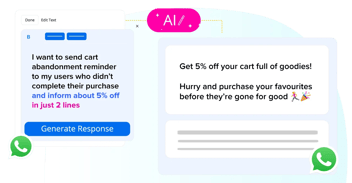

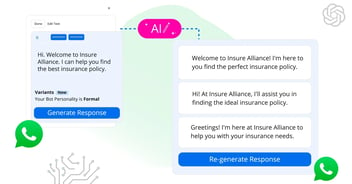

While AI models can generate high-quality outputs, human guidance is often needed to shape those outputs to specific needs. Prompt engineering is the art of guiding language models to produce contextual and expected outcomes, harnessing their capabilities to align with desired objectives.

With AI becoming a strategic asset for companies to drive innovation and boost productivity, mastering the art of prompt engineering is crucial to realize the full value of Generative AI and maximize its impact.

This blog is a deep dive into prompt engineering: what it is, the techniques behind it, and best practices for crafting effective prompts.

Prompt engineering is the first step in interacting with large language models (LLMs). It involves providing precise and context-rich instructions for Generative AI models to optimize their responses and outputs. This is achieved by carefully selecting words and offering additional context to guide the model towards more relevant and accurate responses. Because LLM outputs can contain biases, hallucinations, or inaccuracies, prompt engineering also helps mitigate these issues by refining the inputs provided to the model.

Here’s a basic prompt that might not fetch you the desired output: “Write about automation”.

Here’s a better prompt, with context-rich instructions: “Write an article about the significance of automation for businesses”.

However, not all Gen AI models work alike, and prompts have to be tailored to each model to get the best results.

For instance, prompts written for OpenAI’s GPT-3 or GPT-4 might need a different approach from prompts written for another large language model. Tailoring them well requires an understanding of each model, including its training data, architecture, and underlying algorithms.

Prompt Engineering Workflow

Prompt Engineering Techniques for Refined AI Interactions

Prompt engineering is a dynamic and evolving concept, with new strategies and techniques being developed all the time. Let’s look at a few techniques that enhance the model’s ability to understand prompts and generate contextual and coherent responses.

Zero-Shot Prompting: This technique involves prompting the model to respond with relevant outputs without providing it with any examples. Let’s look at an example of a zero-shot prompt: “Summarize the plot of the movie ‘Inception’ in three sentences.” This prompt requires the model to generate a concise summary of the movie Inception using only the instruction itself and what it learned during training, with no sample summaries included in the prompt.

Few-Shot Prompting: Also known as in-context learning, this technique provides sample inputs and outputs within the prompt to show the model the kind of response expected. The model is not retrained; it simply picks up the pattern from the prompt. Here’s an example of a prompt in this style: “To do a ‘zumple’ means to bounce up and down energetically. An example of a sentence that uses the word zumple is:”. In practice, such a prompt is usually preceded by one or more worked examples for the model to imitate.

Output: After winning the game, the excited children began to zumple around the playground.
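To make the difference between the two techniques concrete, here is a minimal sketch of a prompt builder. The function name `build_prompt`, the `Input:`/`Output:` layout, and the made-up word "florp" are assumptions chosen for illustration, not part of any particular model's API. With no examples the result is a zero-shot prompt; with one or more examples it becomes a few-shot prompt.

```python
from typing import Sequence, Tuple


def build_prompt(task: str, query: str,
                 examples: Sequence[Tuple[str, str]] = ()) -> str:
    """Assemble a prompt; with no examples it is a zero-shot prompt."""
    parts = [task]
    for example_input, example_output in examples:
        # Each demonstration shows the model the expected input/output pattern.
        parts.append(f"Input: {example_input}\nOutput: {example_output}")
    # The final block leaves "Output:" open for the model to complete.
    parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts)


zero_shot = build_prompt(
    "Summarize the plot of the movie in three sentences.",
    "Inception",
)

few_shot = build_prompt(
    "Write one sentence that uses the made-up word.",
    "To 'zumple' means to bounce up and down energetically.",
    examples=[
        # Hypothetical demonstration, invented purely for illustration.
        ("A 'florp' is a small wooden spoon.",
         "She stirred the soup with a florp."),
    ],
)
print(few_shot)
```

The only difference between the two prompts is the list of demonstrations, which is what makes it easy to experiment with how many examples a task needs.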

Chain-Of-Thought Prompting: In this technique, the prompt engineer asks the language model to explain its reasoning step by step, mimicking the way a person would work through a problem. The aim is to improve performance on tasks that require logic, decision-making, and calculation.

Sample Chain-Of-Thought prompt: The shop had a basket of 12 oranges. If it used 7 to make juice, and added five more oranges to the basket, how many oranges does the basket have now?

Output: The basket initially had 12 oranges. If 7 were used to make juice, there would be 12−7 = 5 oranges left. If five more oranges are added, the total number of oranges in the basket becomes 5+5 = 10. So, the basket now has 10 oranges.
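The orange example can be sketched in code as follows. This is a rough illustration only: the instruction suffix, the `Answer:` convention, and the function names are assumptions, and `sample_response` is a hand-written stand-in for a model reply rather than real model output.

```python
import re

# Assumed instruction that asks for visible reasoning plus a parseable answer.
COT_SUFFIX = ("Let's think step by step, then give the final answer "
              "as 'Answer: <number>'.")


def make_cot_prompt(question: str) -> str:
    """Append the step-by-step instruction to the question."""
    return f"{question}\n{COT_SUFFIX}"


def extract_answer(response: str):
    """Return the integer after the last 'Answer:' marker, or None."""
    matches = re.findall(r"Answer:\s*(-?\d+)", response)
    return int(matches[-1]) if matches else None


question = ("The shop had a basket of 12 oranges. If it used 7 to make juice, "
            "and added five more oranges to the basket, how many oranges does "
            "the basket have now?")
cot_prompt = make_cot_prompt(question)

# Stand-in for a model reply; no model is called in this sketch.
sample_response = ("The basket starts with 12 oranges. 12 - 7 = 5 remain. "
                   "5 + 5 = 10. Answer: 10")

# Check the extracted answer against the arithmetic done directly.
expected = 12 - 7 + 5
print(extract_answer(sample_response) == expected)
```

Asking for a fixed answer marker is a design choice: it keeps the reasoning visible for review while still letting downstream code pick out the final result.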

Retrieval Augmented Generation (RAG): This is an advanced prompting technique that enhances Generative AI model outputs by leveraging external knowledge sources to enrich the context of the prompt. By incorporating additional information retrieved from external databases, documents, or other resources, RAG helps the model produce contextually accurate and relevant responses.

Sample RAG prompt: Retrieve information about the history of the Taj Mahal, including its construction, architectural features, and cultural significance.

Output: The Taj Mahal is an iconic symbol of love and architectural marvel located in Agra, India. Constructed in the 17th century by Mughal Emperor Shah Jahan in memory of his beloved wife Mumtaz Mahal, it features intricate marble carvings, majestic domes, and lush gardens.
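A toy version of the retrieve-then-prompt flow might look like the sketch below. Everything here is an assumption made for illustration: the three-sentence `DOCUMENTS` list stands in for an external knowledge source, and simple word overlap stands in for the retrieval step, which production systems typically handle with a search index or embedding-based search.

```python
import re
from typing import List

# Hypothetical mini knowledge base; a real system would query a document store.
DOCUMENTS = [
    "The Taj Mahal is located in Agra, India.",
    "The Taj Mahal was commissioned by Mughal Emperor Shah Jahan "
    "in memory of Mumtaz Mahal.",
    "Automation helps businesses reduce repetitive manual work.",
]


def tokenize(text: str) -> set:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: List[str], top_k: int = 2) -> List[str]:
    """Rank documents by the number of words they share with the query."""
    query_tokens = tokenize(query)
    scored = [(len(query_tokens & tokenize(doc)), doc) for doc in documents]
    scored = [pair for pair in scored if pair[0] > 0]  # drop unrelated docs
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def build_rag_prompt(question: str, documents: List[str]) -> str:
    """Place the retrieved passages in the prompt ahead of the question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents))
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


rag_prompt = build_rag_prompt("Who built the Taj Mahal and where is it?",
                              DOCUMENTS)
print(rag_prompt)
```

The key point is that the model never searches anything itself in this sketch: retrieval happens first, and the prompt simply carries the retrieved passages as context.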

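Automatic Reasoning & Tool-Use (ART) Prompting: In this technique, the model works through a task as a series of intermediate reasoning steps and calls external tools, such as a calculator, a search engine, or a code interpreter, where a step needs them. Generation pauses whenever a tool is called, and the tool's output is folded back into the response before the model continues.

Sample ART prompt: What are the next three numbers in this sequence: 2, 4, 6, 8, ...?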

Output: 10, 12, 14.
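The tool-use half of ART can be illustrated with a small sketch built around the sequence example. The `[[tool: args]]` marker syntax, the `TOOLS` registry, and the `draft` string are all hypothetical, invented here to show the idea of pausing at a tool call and splicing the result back in; they do not reflect the format of any specific framework, and no model is called.

```python
import re
from typing import Callable, Dict, List


def extend_arithmetic(numbers: List[int], count: int = 3) -> List[int]:
    """Continue a sequence that has a constant difference between terms."""
    step = numbers[1] - numbers[0]
    if any(b - a != step for a, b in zip(numbers, numbers[1:])):
        raise ValueError("not an arithmetic sequence")
    return [numbers[-1] + step * i for i in range(1, count + 1)]


# Hypothetical tool registry; the names are illustrative only.
TOOLS: Dict[str, Callable[[List[int]], List[int]]] = {
    "extend_arithmetic": extend_arithmetic,
}

# Assumed marker format for a tool call inside a draft: [[tool_name: 1, 2, 3]]
TOOL_CALL = re.compile(r"\[\[(\w+):\s*([-\d,\s]+)\]\]")


def resolve_tool_calls(draft: str) -> str:
    """Replace each tool-call marker in the draft with the tool's result."""
    def run(match):
        tool = TOOLS[match.group(1)]
        args = [int(part) for part in match.group(2).split(",")]
        return ", ".join(str(value) for value in tool(args))
    return TOOL_CALL.sub(run, draft)


# Stand-in for a model draft that stops at a tool call.
draft = ("The difference between terms is 2, so the next terms are "
         "[[extend_arithmetic: 2, 4, 6, 8]].")
print(resolve_tool_calls(draft))
```

Delegating the calculation to a tool means the final numbers come from code rather than from the model's own guess.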

8 Best Practices to Craft Effective Prompts

The effectiveness of large language models hinges on the quality of input prompts. The following best practices in prompt engineering can help maximize the accuracy and relevance of generated outputs.

Be Specific: Although LLMs have the capacity to understand a plethora of prompts, specificity is the key to minimizing misinterpretation and ambiguity. By providing detailed and precise prompts, prompt engineers can guide LLMs to produce outputs that align closely with their requirements and intended context. Specific prompts reduce the risk of the model generating irrelevant or misleading responses by offering clear directives and constraints.

Give Examples: When prompt engineers provide specific examples as part of their prompts, large language models get valuable guidance and context to grasp the desired output more effectively. Examples serve as reference points for the LLM, illustrating the tone, style, and content expected in the generated output.

Affirmations: Affirmations are positive statements or directives embedded in prompts to reinforce desired behavior or outcomes in the generated responses. Affirmations help set expectations for the model and clarify the criteria for evaluating the quality of outputs. By articulating specific goals or standards through affirmations, prompt engineers provide a clear framework for assessing the relevance, coherence, and accuracy of the model's responses.

Contextual Data: Including contextual information in prompts helps AI models to generate contextually appropriate, relevant, and accurate responses. For instance, when generating marketing content for a particular demographic, providing demographic data, consumer preferences, or market trends within the prompt can help the model craft persuasive and relevant messaging.

Position in the Prompts: Where information sits within a prompt influences the performance of LLMs, as some models give more weight to the beginning and end of the prompt. As such, prompt engineers can use the opening segment to provide essential context, specify the task, and outline any constraints or guidelines. Similarly, the concluding portion of the prompt plays a pivotal role in shaping the model's understanding and response generation. Experimenting with variations in prompt positioning helps fine-tune the model's behavior and adapt it to specific use cases or preferences.

User Repetition: LLMs tend to give more attention to an instruction when it is repeated. Repeated instructions act as cues that signal the primary objectives or constraints of the task. By echoing key instructions, prompt designers signal the model to prioritize these directives, making it more likely that they are understood and adhered to during response generation.
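Positioning and repetition can be combined in a simple template, sketched below. The function name `frame_prompt`, the section labels, and the customer-service scenario are assumptions for illustration; whether this layout actually helps depends on the model, so it is worth testing against alternatives.

```python
def frame_prompt(key_instruction: str, context: str, task: str) -> str:
    """Put the key instruction first and repeat it as the closing line."""
    return "\n\n".join([
        key_instruction,                 # opening: states the main constraint
        f"Context:\n{context}",
        f"Task: {task}",
        f"Reminder: {key_instruction}",  # closing: repeats the constraint
    ])


framed_prompt = frame_prompt(
    key_instruction="Reply in no more than 50 words.",
    # Hypothetical scenario used only to fill in the template.
    context="The customer is asking about a delayed delivery.",
    task="Write an empathetic reply.",
)
print(framed_prompt)
```

Keeping the layout in one function makes it straightforward to try variations, such as moving the context last or dropping the reminder, and compare the results.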

Define Output Structure: When crafting prompts for APIs, the desired output format should be specified to ensure seamless integration with other systems and applications. For example, specifying JSON as the output format lets developers take advantage of its lightweight, human-readable, and widely supported structure for exchanging data between different platforms and programming languages.
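As a rough sketch of this practice, the snippet below asks for JSON in the prompt and then checks that a reply really has the requested shape before passing it on. The two-key schema, the function names, and `sample_reply` are assumptions for illustration; `sample_reply` is a hand-written stand-in, not real model output.

```python
import json

REQUIRED_KEYS = {"title", "summary"}  # hypothetical schema for this example


def make_json_prompt(topic: str) -> str:
    """Build a prompt that spells out the expected JSON structure."""
    return (
        f"Write about {topic}.\n"
        "Respond with a single JSON object containing exactly these keys: "
        + ", ".join(sorted(REQUIRED_KEYS))
        + ". Do not add any text outside the JSON."
    )


def parse_reply(reply: str) -> dict:
    """Parse a reply and check that it matches the requested structure."""
    data = json.loads(reply)  # raises json.JSONDecodeError on invalid JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = REQUIRED_KEYS - data.keys()
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    return data


# Stand-in for a model reply; no API is called in this sketch.
sample_reply = ('{"title": "Why Automation Matters", '
                '"summary": "A short overview."}')
parsed = parse_reply(sample_reply)
print(parsed["title"])
```

Validating the reply matters because a format request in the prompt is only a request; the receiving system should still reject replies that do not parse.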

Guardrails: Incorporating guardrails into prompt design helps mitigate risks associated with misinterpretation, ambiguity, bias, or malicious data, thereby enhancing the reliability and effectiveness of language models. Guardrails may include specifying the expected format, length, or structure of the generated response, as well as defining boundaries or limitations to ensure compliance with ethical, legal, or regulatory requirements.
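Guardrails stated in a prompt are often paired with checks on the response itself. The sketch below shows one minimal way to do that, assuming a 50-word limit and a small list of blocked terms; both values, and the function name `check_guardrails`, are hypothetical and would be replaced by an organization's own policies.

```python
from typing import List

BLOCKED_TERMS = {"password", "ssn"}  # hypothetical policy list
MAX_WORDS = 50                       # hypothetical length limit


def check_guardrails(response: str) -> List[str]:
    """Return a list of violations; an empty list means the response passes."""
    violations = []
    words = response.split()
    if len(words) > MAX_WORDS:
        violations.append(f"too long: {len(words)} words")
    lowered = response.lower()
    for term in sorted(BLOCKED_TERMS):
        # Simple substring match; real policies usually need stricter matching.
        if term in lowered:
            violations.append(f"blocked term: {term}")
    return violations


print(check_guardrails("Thanks for your patience. Your order ships today."))
print(check_guardrails("Please send us your password to continue."))
```

Returning a list of violations rather than a single pass/fail flag makes it easier to log why a response was rejected or to ask the model for a corrected version.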

Conclusion

As AI models become increasingly accessible and pervasive, prompt engineering is a valuable skill that, if cultivated, can help harness the combined intelligence of humans and machines to tackle complex problems, drive innovation, and unlock new opportunities for growth and advancement. Best practices in prompt engineering help optimize the effectiveness of AI models, ensuring that they understand user intent accurately and generate relevant and coherent responses.