Unlocking Multi-Cloud AI: Securely Connect Azure AKS with Google Vertex AI (No Keys Required)

In 2025, organizations are moving beyond single-cloud strategies. Running containerized apps on Azure Kubernetes Service (AKS) while tapping into Google’s Vertex AI for cutting-edge LLMs and generative AI is becoming the new norm.

We are cloud-agnostic too, and securing this kind of cross-cloud setup was one of the key challenges we faced. We addressed it by following established standards and putting the necessary security and compliance controls in place.

But here’s the catch:

Service account keys and static credentials are outdated.

They’re hard to rotate, easy to leak, and a nightmare for compliance teams.

The modern solution?

Identity Federation - a cloud-native way to let your workloads talk securely across platforms without storing secrets.

Why This Matters for Your Organization

- Multi-cloud flexibility: Run apps where it’s cost-effective (Azure), use AI where it’s best-in-class (Google).

- Zero credential risk: No service account keys in GitHub, pipelines, or clusters.

- Compliance-ready: Short-lived, auditable tokens help satisfy the credential-management requirements of SOC 2, ISO, and GDPR.

- Developer velocity: Teams don't waste time managing secrets; they just consume APIs securely.

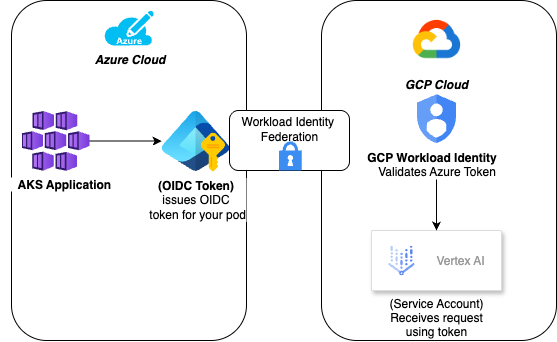

How Identity Federation Works

Instead of generating and storing Google service account keys, your AKS pods:

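- Read a short-lived Kubernetes service account token that AKS projects into the pod, signed by the cluster's OIDC issuer (Azure AD Workload Identity injects this token)

- Present it to Google Cloud Workload Identity Federation, which verifies it against the AKS issuer and returns a federated token

- Exchange that federated token for a short-lived access token for a Google service account that is allowed to call Vertex AI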

The result: Your app securely calls Vertex AI APIs without ever touching a static key.
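Under the hood, the exchange corresponds to two HTTP calls: an RFC 8693 token exchange at Google's Security Token Service, followed by a call to the IAM Credentials API that mints a short-lived access token for the Google service account. The sketch below only builds those two requests so their shape is visible; it sends nothing, and the function name and placeholder values are hypothetical.

```python
import json
from urllib.parse import urlencode

STS_URL = "https://sts.googleapis.com/v1/token"
IAM_CREDENTIALS_URL = (
    "https://iamcredentials.googleapis.com/v1/projects/-/serviceAccounts/"
    "{email}:generateAccessToken"
)
CLOUD_PLATFORM_SCOPE = "https://www.googleapis.com/auth/cloud-platform"


def build_exchange_requests(subject_token: str, project_number: str, pool_id: str,
                            provider_id: str, sa_email: str):
    """Return (url, body) pairs for the STS exchange and the impersonation call.

    Hypothetical helper for illustration only; it performs no HTTP requests.
    """
    audience = (
        f"//iam.googleapis.com/projects/{project_number}/locations/global/"
        f"workloadIdentityPools/{pool_id}/providers/{provider_id}"
    )
    # Call 1 (form-encoded): trade the Kubernetes-issued JWT for a federated token.
    sts_body = urlencode({
        "grant_type": "urn:ietf:params:oauth:grant-type:token-exchange",
        "audience": audience,
        "scope": CLOUD_PLATFORM_SCOPE,
        "requested_token_type": "urn:ietf:params:oauth:token-type:access_token",
        "subject_token_type": "urn:ietf:params:oauth:token-type:jwt",
        "subject_token": subject_token,
    })
    # Call 2 (JSON, sent with the federated token as a Bearer header): mint a
    # short-lived access token for the Vertex AI service account.
    impersonation_body = json.dumps({"scope": [CLOUD_PLATFORM_SCOPE]})
    return (
        (STS_URL, sts_body),
        (IAM_CREDENTIALS_URL.format(email=sa_email), impersonation_body),
    )
```

In practice the Google auth library performs both calls, and refreshes the result, when it is given a credential configuration, so application code rarely needs to do this by hand.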

Step-by-Step Setup

Step 1: Enable OIDC Issuer & Workload Identity on AKS

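The OIDC issuer publishes the cluster's token-signing keys at a public discovery URL, so GCP can verify the service account tokens that AKS issues to pods.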

```bash
az aks update \
  --resource-group <RG_NAME> \
  --name <AKS_CLUSTER_NAME> \
  --enable-oidc-issuer \
  --enable-workload-identity
```

Get the OIDC issuer URL:

```bash
az aks show \
  --resource-group <RG_NAME> \
  --name <AKS_CLUSTER_NAME> \
  --query "oidcIssuerProfile.issuerUrl" \
  -o tsv
```
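GCP validates tokens by fetching the issuer's OpenID Connect discovery document and the signing keys it points to. A quick sanity check before creating the provider is to confirm that the issuer URL serves that document and that its `issuer` field matches. A minimal standard-library sketch; the function names are hypothetical.

```python
import json
import urllib.request


def discovery_url(issuer: str) -> str:
    """OIDC Discovery: drop any trailing '/' and append the well-known path."""
    return issuer.rstrip("/") + "/.well-known/openid-configuration"


def check_discovery(issuer: str, document: dict) -> None:
    """Raise ValueError if the discovery document does not describe `issuer`."""
    # OIDC Discovery requires the advertised issuer to be identical to the issuer URL.
    if document.get("issuer") != issuer:
        raise ValueError(f"issuer mismatch: {document.get('issuer')!r} != {issuer!r}")
    if "jwks_uri" not in document:
        raise ValueError("discovery document has no jwks_uri (signing keys)")


def fetch_discovery(issuer: str, timeout: float = 10.0) -> dict:
    """Download and validate the issuer's discovery document (needs network access)."""
    with urllib.request.urlopen(discovery_url(issuer), timeout=timeout) as response:
        document = json.load(response)
    check_discovery(issuer, document)
    return document
```

Calling `fetch_discovery("<OIDC_ISSUER_URL>")` from any machine with internet access should return a document with a `jwks_uri`; an error here suggests GCP will not be able to validate the cluster's tokens either.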

Step 2: Create Workload Identity Pool in GCP

```bash
gcloud iam workload-identity-pools create "aks-pool" \
  --location="global" \
  --display-name="AKS Pool"
```

Step 3: Create OIDC Provider in GCP

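Replace `<OIDC_ISSUER_URL>` with the value from Step 1. The `--allowed-audiences` value matches the audience (`api://AzureADTokenExchange`) of the token that the Azure Workload Identity webhook projects into pods, so that same token can be presented to GCP; drop the flag if you project a separate token whose audience is the provider's own resource name.

```bash
gcloud iam workload-identity-pools providers create-oidc "aks-provider" \
  --location="global" \
  --workload-identity-pool="aks-pool" \
  --issuer-uri="<OIDC_ISSUER_URL>" \
  --allowed-audiences="api://AzureADTokenExchange" \
  --attribute-mapping="google.subject=assertion.sub"
```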
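Whichever token you present, the provider rejects it unless its `aud` claim matches. According to the Workload Identity Federation API reference, when no allowed audiences are configured the token's audience must be the provider's full canonical resource name, with or without the `https:` prefix. The sketch below encodes that rule so you can check a decoded token's `aud` offline; it approximates GCP's validation rather than reproducing it, and the function names are hypothetical.

```python
def default_audiences(project_number: str, pool_id: str, provider_id: str) -> list:
    """Audiences accepted when the provider has no allowed-audiences list."""
    name = (
        f"iam.googleapis.com/projects/{project_number}/locations/global/"
        f"workloadIdentityPools/{pool_id}/providers/{provider_id}"
    )
    # The canonical resource name, with or without the https: prefix.
    return ["//" + name, "https://" + name]


def audience_accepted(token_aud, project_number: str, pool_id: str, provider_id: str,
                      allowed_audiences=None) -> bool:
    """Approximate the provider's check of a token's `aud` claim (string or list)."""
    allowed = allowed_audiences or default_audiences(project_number, pool_id, provider_id)
    audiences = token_aud if isinstance(token_aud, list) else [token_aud]
    return any(aud in allowed for aud in audiences)
```

For example, a token whose `aud` is `["api://AzureADTokenExchange"]` passes only when that value is configured as an allowed audience.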

Step 4: Create a GCP Service Account for Vertex AI Access

```bash
gcloud iam service-accounts create aks-vertex-ai-sa \
  --display-name "AKS workloads to use Vertex AI"
```

Grant it only the role it needs (least privilege):

```bash
gcloud projects add-iam-policy-binding <PROJECT_ID> \
  --member="serviceAccount:aks-vertex-ai-sa@<PROJECT_ID>.iam.gserviceaccount.com" \
  --role="roles/aiplatform.user"
```
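Least privilege is easy to grant and easy to erode later. One way to check it, assuming you have exported the project policy with `gcloud projects get-iam-policy <PROJECT_ID> --format=json > policy.json`, is to list every role bound to the service account and flag anything beyond `roles/aiplatform.user`. The helper names are hypothetical, and this sketch only inspects project-level bindings (not folder, organization, or resource-level ones) and ignores IAM conditions.

```python
import json

# Roles the Vertex AI service account is expected to hold at project level.
ALLOWED_ROLES = frozenset({"roles/aiplatform.user"})


def load_policy(path: str) -> dict:
    """Load an IAM policy exported as JSON."""
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)


def roles_for_member(policy: dict, member: str) -> set:
    """Collect every role bound to `member` (conditions are ignored)."""
    return {
        binding["role"]
        for binding in policy.get("bindings", [])
        if member in binding.get("members", [])
    }


def excess_roles(policy: dict, member: str, allowed=ALLOWED_ROLES) -> set:
    """Roles beyond the allowed set; an empty result means least privilege holds."""
    return roles_for_member(policy, member) - set(allowed)
```

Usage: `excess_roles(load_policy("policy.json"), "serviceAccount:aks-vertex-ai-sa@<PROJECT_ID>.iam.gserviceaccount.com")` should return an empty set.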

Step 5: Bind Workload Identity Pool to the Service Account

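Allow the federated identity of your Kubernetes service account to impersonate the Google service account. With the `google.subject=assertion.sub` mapping from Step 3, the subject is the token's `sub` claim, which for AKS-issued service account tokens has the form `system:serviceaccount:<NAMESPACE>:<SERVICE_ACCOUNT_NAME>`; for the `aks-app-sa` service account in Step 6 that is `system:serviceaccount:<NAMESPACE>:aks-app-sa`.

```bash
gcloud iam service-accounts add-iam-policy-binding \
  aks-vertex-ai-sa@<PROJECT_ID>.iam.gserviceaccount.com \
  --role="roles/iam.workloadIdentityUser" \
  --member="principal://iam.googleapis.com/projects/<PROJECT_NUMBER>/locations/global/workloadIdentityPools/aks-pool/subject/system:serviceaccount:<NAMESPACE>:aks-app-sa"
```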
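The member string is long, and a typo in the subject is typically not caught when the binding is created; it only surfaces later as a permission error when the pod tries to impersonate the service account. Building it from its parts avoids that. A small sketch, assuming the `google.subject=assertion.sub` mapping and a global pool; the function names are hypothetical.

```python
def k8s_subject(namespace: str, service_account: str) -> str:
    """`sub` claim of an AKS-issued service account token."""
    return f"system:serviceaccount:{namespace}:{service_account}"


def principal_member(project_number: str, pool_id: str, subject: str) -> str:
    """IAM member string for a single federated subject in a global pool."""
    return (
        f"principal://iam.googleapis.com/projects/{project_number}/locations/global/"
        f"workloadIdentityPools/{pool_id}/subject/{subject}"
    )


def binding_command(sa_email: str, member: str) -> list:
    """argv for the gcloud binding command, e.g. for subprocess.run(..., check=True)."""
    return [
        "gcloud", "iam", "service-accounts", "add-iam-policy-binding", sa_email,
        "--role=roles/iam.workloadIdentityUser",
        f"--member={member}",
    ]
```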

Step 6: Configure the AKS Workload Identity Service Account

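Create a Kubernetes service account for your workload and annotate it with your Azure managed identity's client ID:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: aks-app-sa
  namespace: <NAMESPACE>
  annotations:
    azure.workload.identity/client-id: <AZURE_MANAGED_IDENTITY_CLIENT_ID>
```

Deploy your workload with this service account and add the label `azure.workload.identity/use: "true"` to its pod template. The Azure Workload Identity webhook then mounts a signed service account token into each pod and exposes its path in the `AZURE_FEDERATED_TOKEN_FILE` environment variable.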
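To confirm which `sub` and `aud` values a pod actually presents (and therefore what Steps 3 and 5 must match), you can decode the projected token's payload from inside the pod. This is a debugging sketch that skips signature verification, so never use it for authorization decisions; it assumes the webhook has set `AZURE_FEDERATED_TOKEN_FILE`, and the function names are hypothetical.

```python
import base64
import json
import os
from typing import Optional


def decode_jwt_claims(token: str) -> dict:
    """Return the JWT payload as a dict. Does NOT verify the signature."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("not a JWT: expected header.payload.signature")
    payload = parts[1] + "=" * (-len(parts[1]) % 4)  # restore base64url padding
    return json.loads(base64.urlsafe_b64decode(payload))


def read_projected_token(path: Optional[str] = None) -> str:
    """Read the token the Azure Workload Identity webhook mounts into the pod."""
    path = path or os.environ["AZURE_FEDERATED_TOKEN_FILE"]
    with open(path, encoding="utf-8") as handle:
        return handle.read().strip()
```

Inside the pod, `decode_jwt_claims(read_projected_token())` returns the claims; check that `sub` is `system:serviceaccount:<NAMESPACE>:aks-app-sa` and that `aud` is one the provider accepts.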

Step 7: Call Vertex AI from Your Application

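Now your AKS app can securely request predictions. The Google client libraries pick up federated credentials through Application Default Credentials, so generate a credential configuration file once (it holds no secrets) with `gcloud iam workload-identity-pools create-cred-config projects/<PROJECT_NUMBER>/locations/global/workloadIdentityPools/aks-pool/providers/aks-provider --service-account=aks-vertex-ai-sa@<PROJECT_ID>.iam.gserviceaccount.com --credential-source-file=/var/run/secrets/azure/tokens/azure-identity-token --output-file=credential-config.json`, mount it into the pod (for example from a ConfigMap), and set `GOOGLE_APPLICATION_CREDENTIALS` to its path: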

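```python
from google.cloud import aiplatform

# Calls to a regional endpoint must go through the matching regional API host.
client = aiplatform.gapic.PredictionServiceClient(
    client_options={"api_endpoint": "us-central1-aiplatform.googleapis.com"}
)
response = client.predict(
    endpoint="projects/<PROJECT_ID>/locations/us-central1/endpoints/<ENDPOINT_ID>",
    # The instance schema depends on the model deployed to this endpoint.
    instances=[{"content": "Summarize this report in 5 bullet points"}],
)
print(response)
```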

No keys. No secrets. 100% federated access.
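The credential configuration that Application Default Credentials reads (via `GOOGLE_APPLICATION_CREDENTIALS`) is a small JSON document of type `external_account`. The sketch below assembles roughly what `gcloud iam workload-identity-pools create-cred-config` writes for this setup, which can help when reviewing or templating it. The function names are hypothetical, and the token path assumes the Azure Workload Identity webhook's default mount.

```python
import json


def build_credential_config(project_number: str, pool_id: str, provider_id: str,
                            sa_email: str, token_file: str) -> dict:
    """Assemble an external_account credential configuration (contains no secrets)."""
    audience = (
        f"//iam.googleapis.com/projects/{project_number}/locations/global/"
        f"workloadIdentityPools/{pool_id}/providers/{provider_id}"
    )
    return {
        "type": "external_account",
        "audience": audience,
        "subject_token_type": "urn:ietf:params:oauth:token-type:jwt",
        "token_url": "https://sts.googleapis.com/v1/token",
        # Second hop: trade the federated token for the service account's token.
        "service_account_impersonation_url": (
            "https://iamcredentials.googleapis.com/v1/projects/-/serviceAccounts/"
            f"{sa_email}:generateAccessToken"
        ),
        # The JWT is read from this file whenever credentials are refreshed.
        "credential_source": {"file": token_file, "format": {"type": "text"}},
    }


def write_credential_config(path: str, config: dict) -> None:
    """Write the configuration where GOOGLE_APPLICATION_CREDENTIALS will point."""
    with open(path, "w", encoding="utf-8") as handle:
        json.dump(config, handle, indent=2)
```

Because the file only references the token path, the kubelet can rotate the projected token without any change to the configuration.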

Safe LLM Usage in Enterprise

AI is powerful, but also risky if misused. Identity federation controls who can call Vertex AI; combined with application-level safeguards and Vertex AI's safety features, it lets you enforce:

✅ Data privacy – Tokenize or mask sensitive data before sending to LLMs

✅ Prompt safety – Block malicious instructions (prompt injections)

✅ Output filtering – Use Vertex AI safety filters for toxicity & PII

✅ Least privilege IAM – The federated service account can call Vertex AI and nothing else
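The data-privacy item can start as simply as masking obvious identifiers before a prompt leaves your cluster. The regex sketch below is deliberately crude: it will miss many kinds of PII and will over-mask things such as long digit runs and dates, so treat it as a placeholder for a dedicated service such as Google Cloud's Sensitive Data Protection. The function name and patterns are hypothetical.

```python
import re

# Crude illustrative patterns; real PII detection needs a dedicated service.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def mask_pii(text: str) -> str:
    """Replace email addresses and phone-like digit runs with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)
```

Apply it to every user-supplied string before building the `instances` payload, so the raw values never reach the model.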

Business Benefits at a Glance

| Benefit | Why It Matters |
| --- | --- |
| No secrets to manage | Reduces breach risk and operational overhead |
| Short-lived credentials | Supports SOC 2, ISO, and GDPR compliance |
| Faster time-to-market | Teams innovate without IAM bottlenecks |
| Multi-cloud advantage | Optimize cost and use best-in-class AI |
| Future-proof | Extends to any other Google Cloud AI/ML service you adopt |

Final Thoughts

By combining Azure AKS with Google Vertex AI through identity federation, you get the best of both worlds:

- Azure for enterprise-grade Kubernetes

- Google Cloud for world-class AI/LLMs

- A zero-key, secure, compliant bridge between them

This isn’t just an IT upgrade - it’s a strategic enabler for safe, multi-cloud AI innovation.